Interactive Demo

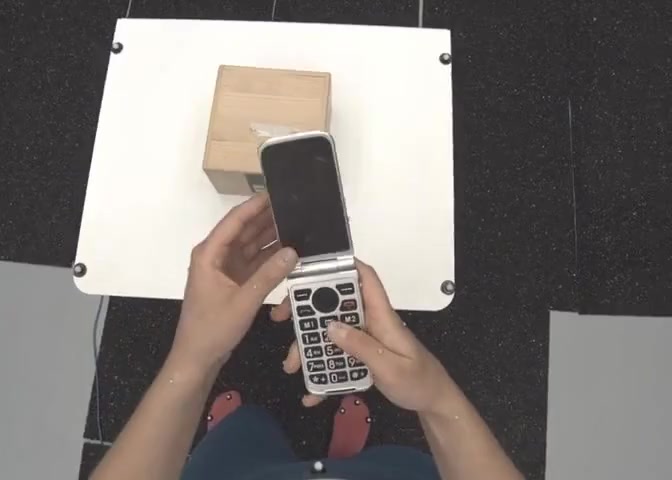

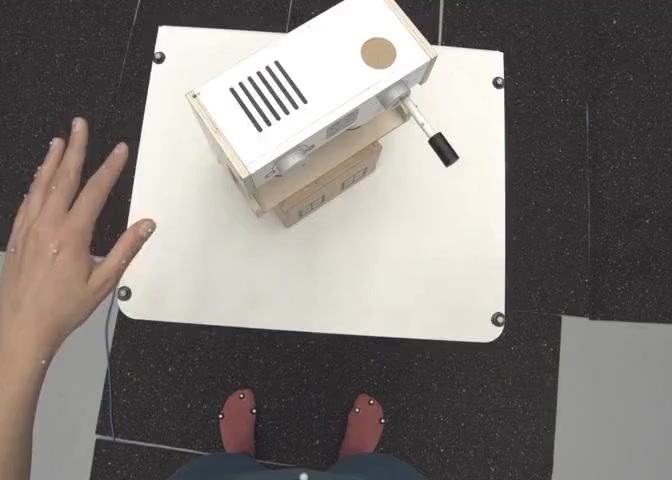

Multi-Object Manipulation

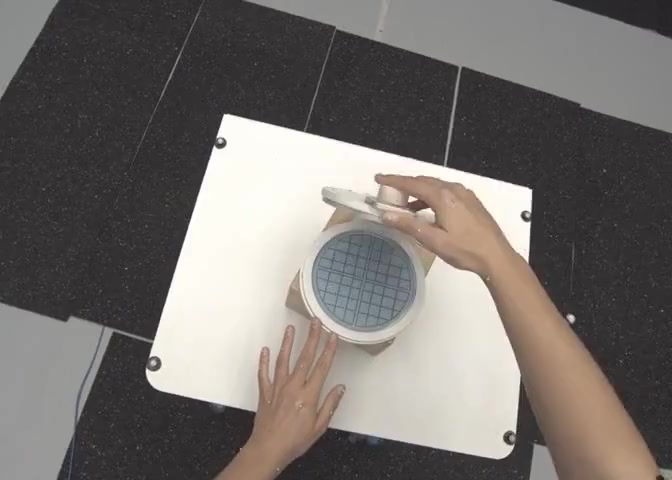

State-Changing Interaction

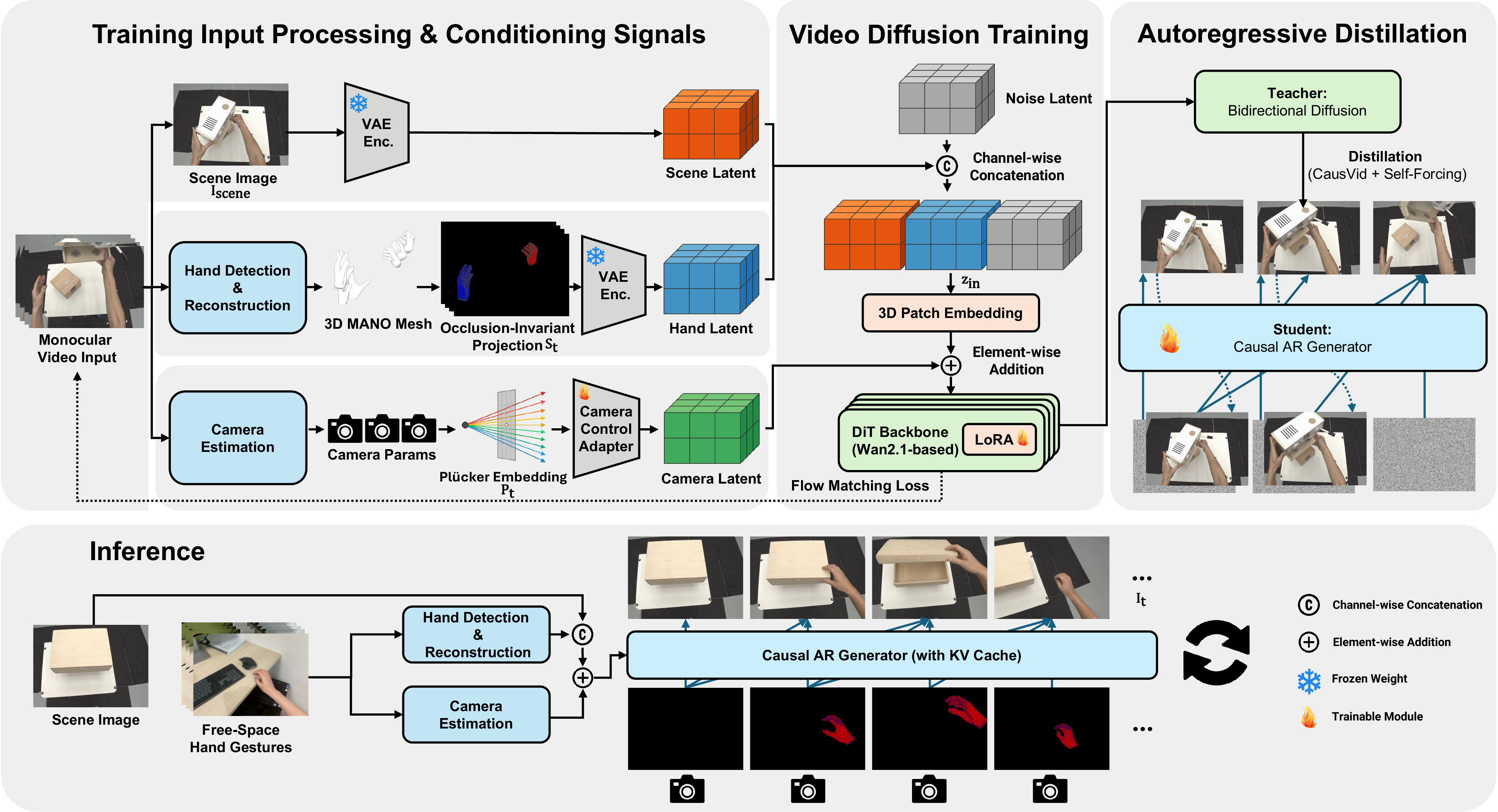

Egocentric interactive world models are essential for augmented reality and embodied AI, where visual generation must respond to user input with low latency, geometric consistency, and long-term stability. We study egocentric interaction generation from a single scene image under free-space hand gestures, aiming to synthesize photorealistic videos in which hands enter the scene, interact with objects, and induce plausible world dynamics under head motion. This setting introduces fundamental challenges, including distribution shift between free-space gestures and contact-heavy training data, ambiguity between hand motion and camera motion in monocular views, and the need for arbitrary-length video generation. We present Hand2World, a unified autoregressive framework that addresses these challenges through occlusion-invariant hand conditioning based on projected 3D hand meshes, allowing visibility and occlusion to be inferred from scene context rather than encoded in the control signal. To keep generation stable under egocentric viewpoint changes, we inject explicit camera geometry via per-pixel Plücker-ray embeddings, disentangling camera motion from hand motion and preventing background drift. We further develop a fully automated monocular annotation pipeline and distill a bidirectional diffusion model into a causal generator, enabling arbitrary-length synthesis. Experiments on three egocentric interaction benchmarks show substantial improvements in perceptual quality and 3D consistency while supporting camera control and long-horizon interactive generation.
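The abstract states that camera geometry is injected as per-pixel Plücker-ray embeddings. As a rough illustration of what such an embedding contains, the sketch below computes the standard 6-channel Plücker coordinates (the moment o × d and the unit direction d) of every pixel's viewing ray for a pinhole camera. It is not the Hand2World implementation: the function name `plucker_embedding`, the camera-to-world convention for `R` and `t`, the 0.5 pixel-center offset, and the (moment, direction) channel order are all assumptions made for this example.

```python
import numpy as np


def plucker_embedding(K, R, t, height, width):
    """Per-pixel Plücker-ray embedding for a pinhole camera (illustrative sketch).

    Assumed conventions (hypothetical, not taken from the paper):
      K: (3, 3) intrinsics matrix.
      R: (3, 3) camera-to-world rotation.
      t: (3,) camera center in world coordinates (the ray origin o).
    Returns an array of shape (height, width, 6) holding (o x d, d) per pixel.
    """
    # Homogeneous coordinates of pixel centers, shape (H, W, 3).
    u, v = np.meshgrid(np.arange(width) + 0.5, np.arange(height) + 0.5)
    pix = np.stack([u, v, np.ones_like(u)], axis=-1)

    # Back-project into camera-frame directions (K^-1 p), then rotate to world (R d).
    dirs_cam = pix @ np.linalg.inv(K).T
    dirs_world = dirs_cam @ R.T
    dirs_world /= np.linalg.norm(dirs_world, axis=-1, keepdims=True)

    # Moment m = o x d; every pixel shares the same origin, so t is broadcast.
    moment = np.cross(np.asarray(t, dtype=float), dirs_world)

    # Concatenate into 6 channels: (moment, direction).
    return np.concatenate([moment, dirs_world], axis=-1)
```

Because (o + λd) × d = o × d, the six channels describe each pixel's viewing ray independently of where along the ray the origin is placed, and the moment is always orthogonal to the direction. How Hand2World fuses this map into its generator is not described in this summary.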

@misc{wang2026hand2worldautoregressiveegocentricinteraction,
  title={Hand2World: Autoregressive Egocentric Interaction Generation via Free-Space Hand Gestures},
  author={Yuxi Wang and Wenqi Ouyang and Tianyi Wei and Yi Dong and Zhiqi Shen and Xingang Pan},
  year={2026},
  eprint={2602.09600},
  archivePrefix={arXiv},
  primaryClass={cs.CV},
  url={https://arxiv.org/abs/2602.09600},
}